One recommended approach to improving an application’s performance is caching. We have previously published an article about caching concepts and benefits, focusing on Spring Boot.

In this article, we will discuss 7 techniques for optimizing caching in Spring Boot applications.


1- Identifying Ideal Candidates for Optimal Performance

First and foremost, we need to know which data makes a good caching candidate. The first things that come to mind are costly, time-consuming operations such as querying a database, calling a web service, or performing a complex calculation. These are correct candidates, but it also helps to define some general characteristics of good candidates so that we can recognize them in our own application:

- Frequently Accessed Data: Data that is accessed frequently and repeatedly is a good candidate for caching.

- Costly Fetches or Computations: Data that requires significant time or computational resources to retrieve or process.

- Static or Rarely Changing Data: Data that does not change often, ensuring that cached data remains valid for a longer period.

- High Read-to-Write Ratio: Data that is read far more often than it is modified can be cached effectively, because the benefit of fast reads from the cache outweighs the cost of keeping the cache up to date.

- Predictable Patterns: Data that follows predictable access patterns, allowing for more efficient cache management.

These characteristics can help us effectively identify and cache data that will yield our application’s most significant performance improvements.

Now that we know how to find ideal candidates for caching, we can enable caching in our Spring Boot application and use its annotations (or the programmatic API). I discussed in detail how to use caching in Spring Boot, and how Digma can help us find cache misses or identify caching candidates, in a previous article.

2- Cache Expiration

Setting appropriate expiration policies keeps our cached data valid, up to date, and memory-efficient, which improves both performance and consistency in Spring Boot applications.

I recommend these approaches to manage cache expiration in a Spring Boot application:

Eviction Policies:

The best-known eviction policies are:

- Least Recently Used (LRU): Evicts the least recently accessed items first.

- Least Frequently Used (LFU): Evicts the least frequently accessed items first.

- First In, First Out (FIFO): Evicts the oldest items (those added first) first, regardless of how often or how recently they were accessed.

The Spring Cache abstraction itself does not implement these eviction policies; eviction is handled by the underlying cache provider, so you configure it through the provider’s specific settings. By carefully selecting and configuring eviction policies, you can ensure that your caching mechanism remains efficient, effective, and aligned with your application’s performance and resource utilization goals.
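To make the difference between LRU and FIFO concrete, here is a minimal plain-Java sketch built on java.util.LinkedHashMap. It is not Spring or provider code: the BoundedCache class and its capacity are hypothetical, and in a real application you would configure the equivalent behavior in your cache provider. With accessOrder set to true the map keeps entries in access order (LRU); with false it keeps insertion order (FIFO). LFU needs per-entry access counters and is not shown.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical bounded cache, used only to illustrate eviction order.
// accessOrder = true  -> the least recently used entry is evicted (LRU)
// accessOrder = false -> the oldest inserted entry is evicted (FIFO)
class BoundedCache<K, V> extends LinkedHashMap<K, V> {
    private final int capacity;

    BoundedCache(int capacity, boolean accessOrder) {
        super(16, 0.75f, accessOrder);
        this.capacity = capacity;
    }

    static <K, V> BoundedCache<K, V> lru(int capacity) {
        return new BoundedCache<>(capacity, true);
    }

    static <K, V> BoundedCache<K, V> fifo(int capacity) {
        return new BoundedCache<>(capacity, false);
    }

    // LinkedHashMap calls this after each put; returning true removes the eldest entry.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity;
    }
}
```

With a capacity of 2, inserting "a" and "b", reading "a", and then inserting "c" leaves {a, c} in the LRU variant but {b, c} in the FIFO variant, because only LRU treats the read as a reason to keep "a".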

Time-based Expiration:

How we define the time-to-live (TTL), the period after which cache entries expire, differs between cache providers. For example, when using Redis for caching in our Spring Boot application, we can specify the time-to-live with this property:

spring.cache.redis.time-to-live=10m

If your cache provider does not support time-to-live, you can approximate it using the @CacheEvict annotation and a scheduler like this (scheduling must be enabled with @EnableScheduling; note that this clears the whole cache at a fixed rate rather than expiring each entry individually):

@CacheEvict(value = "cache1", allEntries = true)
@Scheduled(fixedRateString = "${your.config.key.for.ttl.in.milli}")
public void emptyCache1() {
    // Flushing cache, we don't need to write any code here except for a descriptive log!
}

Custom Eviction Policies:

By defining custom expiry policies that evict a single entry or all entries in response to an event or situation, we can prevent cache pollution and keep the cache consistent. Spring provides several tools to support custom expiry policies:

@CacheEvict: Removes one or all entries from the cache.

@CachePut: Updates entries with a new value.

CacheManager: We can implement a custom eviction policy using Spring’s CacheManager and Cache interfaces; Cache methods such as evict(), put(), or clear() can be used to do so. We can also reach the underlying cache provider’s additional features through the getNativeCache() method.

The most important thing about custom eviction policies is finding the right place and conditions to evict entries.
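The following plain-Java sketch mirrors what @Cacheable, @CachePut, and @CacheEvict do, to show where event-driven eviction hooks in. It is an illustration under stated assumptions, not Spring’s implementation: ProductCatalog, the Map standing in for a database, and the onBulkImport event are all hypothetical. Reads populate the cache, updates refresh the affected entry, deletes evict a single entry, and a bulk import clears everything.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical service; "database" is a plain map standing in for a real repository.
class ProductCatalog {
    private final Map<Long, String> database = new HashMap<>();
    private final Map<Long, String> cache = new ConcurrentHashMap<>();
    int databaseReads = 0; // how often a lookup had to go to the "database"

    // Like @Cacheable: return the cached value, or load and cache it on a miss.
    String find(long id) {
        String cached = cache.get(id);
        if (cached != null) {
            return cached;
        }
        databaseReads++;
        String loaded = database.get(id);
        if (loaded != null) {
            cache.put(id, loaded);
        }
        return loaded;
    }

    // Like @CachePut: write the change and refresh the cached entry with the new value.
    void update(long id, String name) {
        database.put(id, name);
        cache.put(id, name);
    }

    // Like @CacheEvict(key = "#id"): remove only the affected entry.
    void delete(long id) {
        database.remove(id);
        cache.remove(id);
    }

    // Like @CacheEvict(allEntries = true): a bulk change invalidates every entry.
    void onBulkImport(Map<Long, String> products) {
        database.putAll(products);
        cache.clear();
    }
}
```

The design choice is the one the paragraph above highlights: each write path decides explicitly whether to refresh, evict one entry, or clear the whole cache.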

3- Conditional Caching

Conditional caching, alongside eviction policies, plays an important role in optimizing our caching strategies. In some cases, we don’t need to store all data of a specific entity in the cache. Conditional caching ensures that only data that meets specific criteria is stored in the cache.

This keeps unnecessary data out of the cache space, thereby optimizing resource utilization.

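Both @Cacheable and @CachePut annotations have the condition and unless attributes, which allow us to define conditions for caching items:

- condition: Specifies a SpEL (Spring Expression Language) expression that must evaluate to true for the data to be cached (or updated). For @Cacheable, it is evaluated before the method is invoked, so it can refer to the method arguments but not to #result.

- unless: Specifies a SpEL expression that must evaluate to false for the data to be cached (or updated). It is evaluated after the method has been invoked, so it can refer to the returned value through #result.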

To clarify, take a look at this code:

@Cacheable(value = "employeeByName", unless = "#result.size() <= 10 or #result.size() >= 1000")
public List<Employee> employeesByName(String name) {
    // Method logic to retrieve data
    return someEmployeeList;
}

In this code, the list of employees will only be cached if it contains more than 10 and fewer than 1000 elements. Both size checks go into unless, because the condition of @Cacheable is evaluated before the method runs and cannot refer to #result.

Finally, as in the previous section, we can also implement conditional caching programmatically using the CacheManager and Cache interfaces, which provides more flexibility and control over caching behaviour.
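As a rough plain-Java picture of that programmatic route, the sketch below applies the same two checks by hand: a condition predicate on the argument before loading, and an unless-style veto on the result afterwards. ConditionalCache is a hypothetical helper, not a Spring class; with Spring you would read and write through a Cache obtained from the CacheManager instead of the internal Map.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.Predicate;

// Hypothetical helper mirroring @Cacheable(condition = ..., unless = ...).
class ConditionalCache<K, V> {
    private final Map<K, V> store = new ConcurrentHashMap<>();
    private final Predicate<K> condition; // checked BEFORE loading; sees only the argument
    private final Predicate<V> unless;    // checked AFTER loading; sees the result

    ConditionalCache(Predicate<K> condition, Predicate<V> unless) {
        this.condition = condition;
        this.unless = unless;
    }

    V get(K key, Function<K, V> loader) {
        if (!condition.test(key)) {
            return loader.apply(key); // condition false: the cache is neither read nor written
        }
        V cached = store.get(key);
        if (cached != null) {
            return cached;
        }
        V result = loader.apply(key);
        if (result != null && !unless.test(result)) {
            store.put(key, result); // stored only when "unless" does not veto it
        }
        return result;
    }

    boolean isCached(K key) {
        return store.containsKey(key);
    }
}
```

With thresholds mirroring the employee example (cache only lists with more than 10 and fewer than 1000 elements), a 3-element result is returned but not stored, while a 50-element result is cached.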

4- Distributed cache vs. Local Cache

When we talk about caching, we usually think of distributed caches such as Redis, Memcached, or Hazelcast. However, in the era of microservices architecture, local caching also plays a big role in improving application performance.

Understanding the differences between a local cache and a distributed cache helps us choose the right strategy to optimize caching in our Spring Boot application. Each type has pros and cons that are essential to consider based on the application’s needs.

What is local cache?

A local cache is a caching mechanism in which data is stored in memory on the same machine or instance where the application is running. Some well-known local caching libraries are Ehcache, Caffeine, and Guava Cache.

A local cache allows extremely fast access to cached data because it avoids the network latency and overhead of remote data retrieval that a distributed cache involves. A local cache is also generally easier to set up and manage than a distributed cache and doesn’t require additional infrastructure.
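At its core, a local cache is an in-process map with a loading step. As an illustration only (this is not how Ehcache, Caffeine, or Guava Cache are implemented), the sketch below memoizes an expensive lookup in a ConcurrentHashMap, the same map type that backs Spring’s simplest in-memory provider, ConcurrentMapCacheManager. The LocalCache class and its loader are hypothetical, and unlike the real libraries this map has no size limit or expiry.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical in-process cache: values live on this JVM's heap, so a hit needs no network call.
class LocalCache<K, V> {
    private final Map<K, V> entries = new ConcurrentHashMap<>();
    private final Function<K, V> loader;
    private final AtomicInteger loads = new AtomicInteger();

    LocalCache(Function<K, V> loader) {
        this.loader = loader;
    }

    // ConcurrentHashMap.computeIfAbsent is atomic per key, so concurrent callers
    // asking for the same missing key trigger a single load.
    V get(K key) {
        return entries.computeIfAbsent(key, k -> {
            loads.incrementAndGet();
            return loader.apply(k);
        });
    }

    int loadCount() {
        return loads.get();
    }
}
```

For example, calling get(4) twice on a LocalCache built with n -> n * n returns 16 both times while running the loader only once.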

When should you use local cache vs. distributed cache?

A local cache is suitable for small applications or microservices where the dataset is small and fits comfortably within the memory of a single machine. It is also applicable to scenarios where low latency is critical and data consistency across instances is not a major concern.

On the other hand, a distributed cache system is appropriate for large-scale applications with significant data caching needs, for which scalability, fault tolerance, and data consistency across multiple instances are critical.

Implementing Local Cache in Spring Boot

Spring Boot supports local caching through various in-memory cache providers like Ehcache, Caffeine, or ConcurrentHashMap. The only things we need to do are add the required dependency and enable caching in our Spring Boot application. For example, to have local caching using Caffeine, we need to add these dependencies:

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-cache</artifactId>
</dependency>
<dependency>
    <groupId>com.github.ben-manes.caffeine</groupId>
    <artifactId>caffeine</artifactId>
</dependency>

Then enable caching using the @EnableCaching annotation:

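import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cache.annotation.EnableCaching;

@SpringBootApplication
@EnableCaching
public class Application {
    public static void main(String[] args) {
        SpringApplication.run(Application.class, args);
    }
}

In addition to the general Spring Cache properties, we can also configure Caffeine with its specific settings like this: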

spring:
  cache:
    caffeine:
      spec: maximumSize=500,expireAfterAccess=10m

5- Custom Key generation strategies

The default key generation strategy used by Spring’s cache annotations (SimpleKeyGenerator, the default since Spring 4.0) usually works well:

- If no params are given, it returns SimpleKey.EMPTY.

- If only one param is given, it returns that instance.

- If more than one param is given, it returns a SimpleKey that contains all parameters.

This approach works well as long as the parameters have natural keys and implement valid hashCode() and equals() methods.
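Since Spring 4.0, the default generator compares every parameter when matching keys rather than relying on a combined hash code alone. The plain-Java sketch below imitates those rules with a record-based composite key and contrasts it with a hash-only key. It does not use Spring’s SimpleKey class; the CompositeKey record and the KeyStrategies helpers are hypothetical. "Aa" and "BB" are used because they are distinct strings with the same String.hashCode().

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical composite key: record equality compares ALL parameters, not just their hash codes.
record CompositeKey(List<Object> params) {}

class KeyStrategies {
    // Mimics the default rules: no params / one param / several params.
    static Object simpleKey(Object... params) {
        if (params.length == 0) {
            return new CompositeKey(List.of()); // stands in for SimpleKey.EMPTY
        }
        if (params.length == 1 && params[0] != null) {
            return params[0]; // a single parameter is the key itself
        }
        return new CompositeKey(Arrays.asList(params.clone())); // wraps every parameter
    }

    // Hash-only key: different argument lists can collapse to the same int.
    static Object hashOnlyKey(Object... params) {
        return Arrays.hashCode(params);
    }
}
```

Here simpleKey("Aa", "x") and simpleKey("BB", "x") are different keys, whereas hashOnlyKey returns the same int for both, so a hash-only cache would serve the first call’s value to the second.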

But in some scenarios, the default key generation strategy does not work well:

- We need meaningful keys

- Methods with Multiple Parameters of the Same Type

- Methods with Optional or Null Parameters

- We need to include contextual data such as locale, tenant ID, or user role in the key to make it unique

Spring Cache provides two ways to define a custom key generation strategy:

- Specify a SpEL (Spring Expression Language) expression in the key attribute; it is evaluated to produce the key:

@CachePut(value = "phonebook", key = "#phoneNumber.name")
PhoneNumber create(PhoneNumber phoneNumber) {
    return phonebookRepository.insert(phoneNumber);
}

- Define a bean that implements the KeyGenerator interface and reference it in the keyGenerator attribute:

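import java.lang.reflect.Method;
import org.springframework.cache.interceptor.KeyGenerator;
import org.springframework.stereotype.Component;
import org.springframework.util.StringUtils;

@Component("customKeyGenerator")
public class CustomKeyGenerator implements KeyGenerator {
    @Override
    public Object generate(Object target, Method method, Object... params) {
        // Builds a readable key like "PhonebookService_create_<param>" (relies on each param's toString())
        // instead of a constant, so different calls don't overwrite each other's entries
        return target.getClass().getSimpleName() + "_" + method.getName() + "_"
                + StringUtils.arrayToDelimitedString(params, "_");
    }
}

// Usage:
@CachePut(value = "phonebook", keyGenerator = "customKeyGenerator")
PhoneNumber create(PhoneNumber phoneNumber) {
    return phonebookRepository.insert(phoneNumber);
}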

Using custom key generation strategies can significantly enhance cache efficiency and effectiveness in our application. A well-designed key generation strategy ensures that cache entries are correctly and uniquely identified, minimizing cache misses and maximizing cache hits.

6- Async cache

The Spring cache abstraction API is traditionally blocking and synchronous. If you are using the WebFlux stack, Spring Cache annotations such as @Cacheable or @CachePut will cache the Reactor wrapper objects (Mono or Flux) themselves rather than the values they emit. In this case, you have 3 approaches:

- Call the cache() method on the Reactor type and annotate the method with Spring Cache annotations, so the cached Mono or Flux replays its result instead of re-executing the pipeline.

- Use the async API provided by the underlying cache provider (if supported) and handle caching programmatically.

- Implement an asynchronous wrapper around the cache API (if your cache provider does not support async access).
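The third approach can be sketched in plain Java: cache a CompletableFuture per key instead of the finished value, so callers never block on a lookup and concurrent callers share one in-flight load. FutureCache is a hypothetical wrapper, not a Spring or Caffeine class; removing failed futures is a design assumption so that errors are retried rather than cached.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executor;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical non-blocking cache: it stores futures, so a lookup returns immediately.
class FutureCache<K, V> {
    private final Map<K, CompletableFuture<V>> entries = new ConcurrentHashMap<>();
    private final Function<K, V> loader;
    private final Executor executor;
    final AtomicInteger loads = new AtomicInteger(); // number of loads started

    FutureCache(Function<K, V> loader, Executor executor) {
        this.loader = loader;
        this.executor = executor;
    }

    CompletableFuture<V> get(K key) {
        // computeIfAbsent is atomic: concurrent callers for the same key share one future.
        CompletableFuture<V> future = entries.computeIfAbsent(key, k -> {
            loads.incrementAndGet();
            return CompletableFuture.supplyAsync(() -> loader.apply(k), executor);
        });
        // Drop failed loads so the next call retries instead of receiving a cached error.
        future.whenComplete((value, error) -> {
            if (error != null) {
                entries.remove(key, future);
            }
        });
        return future;
    }
}
```

Passing Runnable::run as the executor runs each load on the calling thread, which is convenient in tests; an application would normally pass a dedicated thread pool. A Reactor-based caller could wrap the returned future with Mono.fromFuture.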

However, starting with Spring Framework 6.1, the situation is different for WebFlux projects if the cache provider supports asynchronous caching (e.g. Caffeine):

Spring’s declarative caching infrastructure detects reactive method signatures, e.g. returning a Reactor Mono or Flux, and specifically processes such methods for asynchronous caching of their produced values rather than trying to cache the returned Reactive Streams Publisher instances themselves. This requires support in the target cache provider, e.g. with CaffeineCacheManager being set to setAsyncCacheMode(true).

The config will be super simple:

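import java.time.Duration;
import com.github.benmanes.caffeine.cache.Caffeine;
import org.springframework.cache.CacheManager;
import org.springframework.cache.annotation.EnableCaching;
import org.springframework.cache.caffeine.CaffeineCacheManager;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
@EnableCaching
public class CacheConfig {
    @Bean
    public CacheManager cacheManager() {
        final CaffeineCacheManager cacheManager = new CaffeineCacheManager();
        // Example size and expiry values; tune them for your workload
        cacheManager.setCaffeine(Caffeine.newBuilder().maximumSize(500).expireAfterWrite(Duration.ofMinutes(10)));
        cacheManager.setAsyncCacheMode(true); // <-- caches the values emitted by Mono/Flux
        return cacheManager;
    }
}

7- Monitoring cache to find bottlenecks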

Monitoring cache metrics is crucial for identifying bottlenecks and optimizing caching strategies in your application.

The most important metrics to monitor are:

- Cache Hit Rate: The ratio of cache hits to total cache requests. A high hit rate indicates effective caching, while a low hit rate suggests that the cache is not being utilized effectively.

- Cache Miss Rate: The ratio of cache misses to total cache requests. A high miss rate means the cache is frequently unable to provide the requested data, possibly due to insufficient cache size or poor key management.

- Cache Eviction Rate: The frequency at which items are evicted from the cache. A high eviction rate can indicate that the cache is too small or that the eviction policy does not suit the access pattern.

- Memory Usage: The amount of memory being used by the cache.

- Latency: The time taken to retrieve data from the cache.

- Error Rates: How often cache operations fail, for example because of timeouts or connection errors to remote cache servers.
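Hit and miss rates are simple ratios: hit rate = hits / (hits + misses), and miss rate = misses / (hits + misses). The hypothetical InstrumentedCache below (plain Java, not the Micrometer or Caffeine API) counts both on every lookup, which is the kind of counter that cache monitoring tools derive these rates from.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.LongAdder;
import java.util.function.Function;

// Hypothetical instrumented cache that records a hit or a miss on every lookup.
class InstrumentedCache<K, V> {
    private final Map<K, V> entries = new ConcurrentHashMap<>();
    private final LongAdder hits = new LongAdder();
    private final LongAdder misses = new LongAdder();

    V get(K key, Function<K, V> loader) {
        V value = entries.get(key);
        if (value != null) {
            hits.increment();
            return value;
        }
        misses.increment();
        value = loader.apply(key);
        if (value != null) {
            entries.put(key, value); // ConcurrentHashMap rejects null values
        }
        return value;
    }

    // hit rate = hits / (hits + misses); reported as 0 before any request
    double hitRate() {
        long h = hits.sum();
        long total = h + misses.sum();
        return total == 0 ? 0.0 : (double) h / total;
    }

    // miss rate = misses / (hits + misses)
    double missRate() {
        long m = misses.sum();
        long total = hits.sum() + m;
        return total == 0 ? 0.0 : (double) m / total;
    }
}
```

For example, a miss, two hits, and another miss (four lookups) give a hit rate and a miss rate of 0.5 each.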

How to monitor Cache Metrics in Spring Boot

Spring Boot Actuator auto-configures Micrometer instrumentation for all caches that are available on startup. Caches created on the fly or programmatically after the startup phase must be registered explicitly. Check this link to make sure that your cache provider is supported.

First, we need to add the Actuator and Micrometer dependencies:

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-actuator</artifactId>
</dependency>
<dependency>
    <groupId>io.micrometer</groupId>
    <artifactId>micrometer-registry-prometheus</artifactId>
</dependency>

Then enable the Actuator endpoints:

management.endpoints.web.exposure.include=*

Now we can see the list of configured caches at the /actuator/caches endpoint, and for cache metrics we can use the following ones:

- /actuator/metrics/cache.gets

- /actuator/metrics/cache.puts

- /actuator/metrics/cache.evictions

- /actuator/metrics/cache.removals

How Digma can help us to Optimize Caching in Spring Boot

Digma is an observability platform that helps teams understand and optimize their applications by providing insights into runtime behavior. When it comes to optimizing caching, Digma can play a crucial role by offering detailed analytics, monitoring, and actionable recommendations.

Insights: Digma monitors the application’s metrics in real-time and quickly identifies issues like cache misses and other caching-related issues.

Detailed Metrics and Analytics: Digma collects and visualizes detailed metrics, giving us a comprehensive view of our application’s behavior during development, right inside our IDE.

Integration with Development Workflow: Digma integrates with your existing development and CI/CD workflows, making it easy to incorporate cache optimization into your regular development practices.

Let’s continue with two examples that show how Digma helps us to optimize caching in practice:

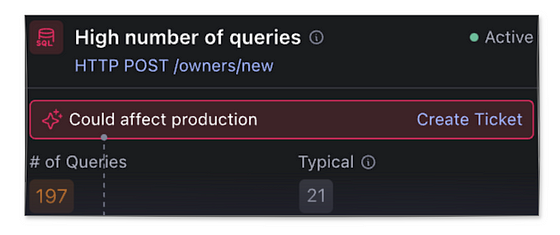

1- Identifying caching candidates: This functionality offers a comprehensive view of how caching impacts the processing of requests within an application. It identifies the specific part of a request that can benefit from caching.

2- Finding inefficient queries: This feature detects time-consuming database queries within an application that could significantly benefit from caching. For example, the high number of queries insight identified by Digma indicates that the current endpoint is triggering an abnormal number of DB queries; this can typically be resolved with caching or with more optimal queries. Another example is the Query Optimization Suggested insight, which flags a query that is especially slow compared to other queries of the same type running against the same DB. We can either optimize such a query or cache its results.

Digma’s high number of queries insight, which can typically be resolved with caching

By leveraging Digma’s advanced observability and analytics capabilities, you can gain deep insights into your caching performance, quickly identify and resolve issues, and continuously optimize your caching strategy.

Final Thought: Optimize Caching in Spring Boot

In our previous article, “10 Spring Boot Performance Best Practices”, we saw that caching is a great way to improve Spring Boot application performance. In this article, we covered 7 techniques for optimizing caching in Spring Boot applications. Optimizing caching is crucial because it directly enhances application performance and scalability by reducing the load on backend systems and speeding up data retrieval. Efficient caching strategies minimize latency, ensure faster response times, and improve the overall user experience.