A Continuous Feedback (CF) Platform for Developers

These last two decades have been formative to a monumental degree for the Software Development craft. I feel fortunate to have been at the heart of Engineering organizations throughout that period, working side by side with smart people excited by the prospect of trying out new ideas and technologies.

Usually, the significance of such transformative periods is clear only in hindsight. In my case, I was deeply appreciative of the fact that we were all pioneers and that the industry was changing. So much was happening! Ideas, technologies, processes, and practices: BDD, TDD, DDD, Event Sourcing, CQRS, CI, and CD were all new concepts. The air was abuzz with new ideas that everyone wanted to embrace and explore.

I am not here to talk about all of these great things that happened to the Software Industry, though. I’m sure that many books were written and will yet be written on the topic. I have nothing much to add except maybe my own personal sense of awe at the scope and reach of these changes.

I’m here to talk about something that didn’t happen. A gap in the infinite DevOps loop left incomplete and how it relates to continuous feedback.

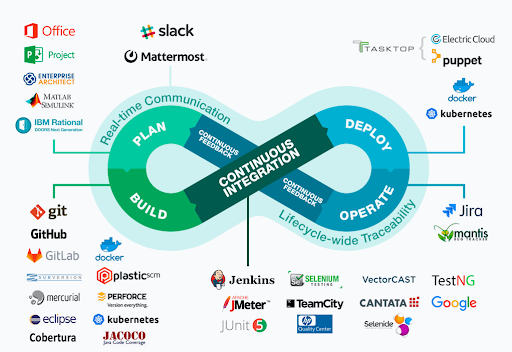

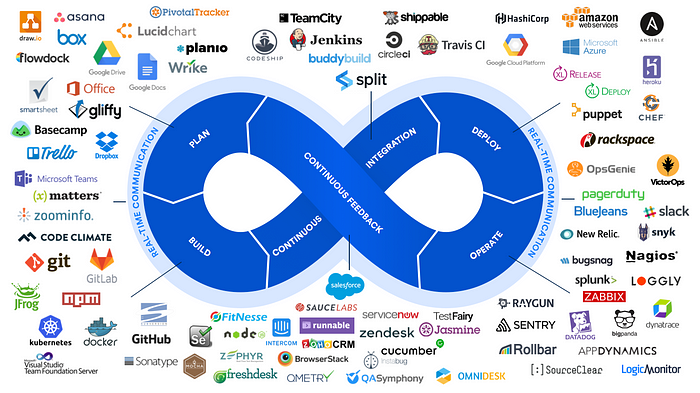

To understand what is missing, I ask that you look at the two diagrams above, captured from two different websites. Can you tell which segment in the DevOps process has no real tools attached to it?

Countless platforms were created to solve everything related to building software, continuously testing it, deploying, and operating it. But taking in this map of the ecosystem, it’s hard not to notice a glaring omission. Whatever happened to Continuous Feedback?

CI and CD are on everyone’s lips these days, but when was the last time you heard someone throwing the term CF around? Does anyone use a Continuous Feedback platform? What type of information really flows back from production into the hands of developers to make the next code changes easier, more correct, and smarter?

With most of my background in .NET and Java, the concept of a REPL blew my mind in the early 2010s. I was getting into dynamically typed languages like Ruby and Python and beginning to challenge some of my previous assumptions about coding. Removing the tedious build step, which was extremely slow in my 2010 enterprise app, seemed like a big upgrade. Instant feedback from code to console: that was just science fiction.

A REPL (Read-Eval-Print Loop), for anyone less familiar with the term, is an interactive shell that lets you try out and evaluate commands in real time. For me, it meant fewer “waiting for the build” coffee breaks. It shortened the time from code conception to the inevitable reality check of runtime errors. [Before anyone interprets this as a criticism of Java or .NET, bear in mind that this was over ten years ago. Today builds are faster, and there are many ways to test your code much more rapidly.]

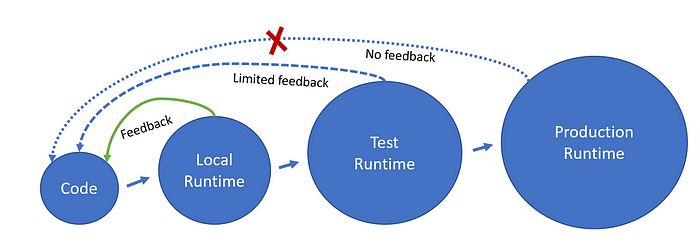

There is still a big gap, however, between that local run, and what actually happens when the code runs in production. Everyone knows the phrase ‘works on my machine’. It’s a true statement. When the developer tests the code in the sterile lab of their IDE, it does (almost) always work.

The reasons are obvious. Factors such as concurrency conditions, timing and delays, request parameters, the behavior of third-party services, and data will often determine how the code behaves. These conditions can be simulated to a very limited extent in testing, but are completely absent when we are designing code. None of the feedback mirrors in our development cockpit are adjusted to cover this huge blind spot in our view.

Here’s the thing that struck me: These super fancy feedback side-view mirrors already exist.

After all, your code, the one you’ve been writing and modifying today, is already running in production. Observability, logging, tracing, and metric-gathering platforms are probably even now collecting a gazillion terabytes of data about it. The code in production is performing badly or magnificently, it is throwing runtime errors that are predictable or not, scaling in and out, servicing different use cases, and exhibiting all sorts of behaviors. It’s just that no insights or learnings based on that collected information are currently integrated into the code design cycles.

I am thinking of Google Analytics, Amplitude, and other feedback platforms for Product Managers, and contrasting that with the meager selection of options available to a developer seeking to garner actionable insights from the test and production environments. There is no reason this part of the loop does not exist, except that we have not yet built it.

From observability to devservability: Continuous feedback

For some reason, the observability platforms we are using today all went for the usual-suspect use cases: detection, troubleshooting, and production ops. Perhaps that is because many of these tools originally targeted Ops and IT teams, or perhaps that’s just where the impact seemed more obvious.

Everything is ‘shifting left’: testing, security, operations, etc. More and more aspects of the release process are now owned by the team which is ultimately accountable for them. For observability tools, it seems like they are ‘pushing left’ instead of doing any type of shifting.

What I’d like to see is how we can make data more relevant not in the days or even minutes after something went wrong, but when the code is still being designed. How can we make important code design decisions more informed and leverage some of the data we are already collecting?

It is all about Context

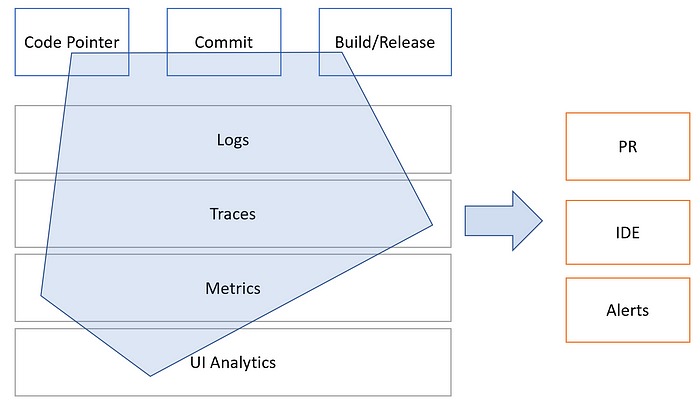

Context is the key to making it work. Most of the data is already being emitted and can be collected and analyzed; we just need a few more threads to tie it all together. To connect the dots, we need to triangulate traces, logs, and metrics with commit identifiers, pointers to code locations, and released binaries.

With this context, we can start generating meaningful analytics and inspect interesting trends that can make our changes more informed and improve our understanding of the system runtime.
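To make the idea of tying runtime data to code a little more concrete, here is a minimal sketch in Python. It assumes each collected span already carries a code location and a commit identifier; the `Span` fields and the `summarize_by_code_location` helper are hypothetical names used for illustration only, not the schema of any particular tracing tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Span:
    """A simplified trace span (hypothetical shape, for illustration)."""
    trace_id: str
    function: str       # assumed pointer to a code location, e.g. "orders.get_order"
    commit: str         # assumed commit identifier attached at build or deploy time
    duration_ms: float
    error: bool


def summarize_by_code_location(spans):
    """Group spans by (function, commit) and compute simple analytics."""
    grouped = defaultdict(list)
    for span in spans:
        grouped[(span.function, span.commit)].append(span)

    summary = {}
    for key, group in grouped.items():
        summary[key] = {
            "calls": len(group),
            "avg_ms": mean([s.duration_ms for s in group]),
            "error_rate": sum(1 for s in group if s.error) / len(group),
        }
    return summary
```

The point of keying the summary on both the function and the commit is that the same numbers can then be compared across releases, rather than read as one undifferentiated stream of production data.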

I should emphasize that this is not about raw data. Connecting your code directly with your ELK stack, for example, would be far from useful. Production data is too massive and sensitive, and would just overload developers, who would not be able to see the forest for the trees. Trends, analytics, and predictions are the key to making this new backward propagation of information useful.

Coding with production continuous feedback

Here’s a glimpse: Imagine editing a function in your IDE with full awareness of the data workflows that actually pass through it. You would see where people are reaching your code from, all the way back to the browser pages. Conversely, it might be interesting to learn that the function has not been hit by any production flow in months.

It’s almost like color being suddenly applied to a black and white movie scene. What parameter values are coming into this function, and how are they different between different flows of origin? What types of runtime exceptions are being encountered and at what rate? We’ll be able to right-click into the runtime contextual data and analytics at will.

Next, we can correlate all of that data to detect trends. Is my function performing badly when the response JSON is large? Perhaps the size of a specific table directly affects response times, something we should be worrying about sooner rather than later. Is there an upward tick in test or prod after a specific commit?
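As a rough illustration of the first question, the sketch below checks whether response time tracks payload size for a single function. It assumes we already have (payload size, duration) pairs sampled from production; the `flag_size_sensitivity` helper and its `threshold` default are hypothetical, and a plain Pearson correlation stands in for whatever analysis a real platform would run.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no trend to correlate with
    return cov / sqrt(var_x * var_y)


def flag_size_sensitivity(samples, threshold=0.8):
    """samples: list of (payload_bytes, duration_ms) pairs.

    Returns (correlation, flagged), where flagged is True when duration
    rises strongly with payload size. The threshold is an arbitrary
    illustrative choice.
    """
    sizes = [size for size, _ in samples]
    durations = [duration for _, duration in samples]
    r = pearson(sizes, durations)
    return r, r >= threshold
```

A strong correlation here would not prove causation, but it is exactly the kind of hint that is cheap to surface next to the function while it is being edited.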

We will probably get answers to questions we never thought to ask. We’ll be able to think twice about sending an array of identifiers received as input to a query, now that we know it is thousands of members long in some production workflows. We’ll be able to view the possible affected components of any given change in a PR to ensure testing coverage vs. actual usage. We’ll be able to iteratively evaluate the impact of code changes as they are rolled out to extend the definition of done.

Finally, it is turtles all the way down. The same type of analytics that is available to me in the function that I am editing will be equally useful in other functions, packages, and microservices I’m consuming. The runtime behavior data would allow me to understand what to expect, and how to leverage other people’s code in a smarter way.
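The array-of-identifiers case can be sketched the same way. Assuming we record the length of that input argument per originating flow, a small profile is enough to reveal the outliers. The flow names, `input_size_profile`, and `flows_exceeding` below are hypothetical and exist only for this example.

```python
from collections import defaultdict
from statistics import median_high


def input_size_profile(observations):
    """observations: iterable of (flow, length) pairs seen at runtime.

    Returns per-flow statistics about how long the input array was.
    """
    by_flow = defaultdict(list)
    for flow, length in observations:
        by_flow[flow].append(length)
    return {
        flow: {
            "samples": len(lengths),
            "median": median_high(lengths),
            "max": max(lengths),
        }
        for flow, lengths in by_flow.items()
    }


def flows_exceeding(profile, limit):
    """Names of flows whose largest observed input is above `limit`."""
    return sorted(flow for flow, stats in profile.items() if stats["max"] > limit)
```

Given a profile like this, a check such as `flows_exceeding(profile, 1000)` would point at the flows where passing the whole array into a single query deserves a second look.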

We have the technology

Technology and process advances are sometimes tied together.

Containers, fast and immutable, made it possible to accelerate testing and made continuous deployment pipelines feasible. Similarly, I believe the state of observability standards and technologies today (OpenTelemetry, infrastructure APIs, Machine Learning) allows us to advance toward real Continuous Feedback.

I started this blog post with a nostalgic spree about innovation in Software over the past two decades. That same sense of pioneering and unexplored potential is what draws me to this topic now. For developers, continuous feedback could be as revolutionary as the introduction of IntelliSense in modern IDEs. This ‘runtime IntelliSense’ can change how we write code to the extent it would be hard to imagine how we ever got by without it.

As you can probably tell, this topic is near and dear to my heart. Over the next months, I plan to continue developing a Continuous Feedback platform and to post about some of the technical and product considerations. I am definitely looking for early adopters who enjoy fiddling with new technologies and processes. If you have some ideas on the subject, or if you’re also excited about testing out new concepts (first theoretically, but then more practically) and being a part of something new, please do reach out. I’ll be happy to hear from you, either on social media or by email: roni.dover@gmail.com.

Update 4/13/22: I was actually able to get a small team together and start a platform for exactly this kind of continuous feedback! Take a look at https://github.com/digma-ai/digma

Update 9/19/23: We’re officially launching the Digma Continuous Feedback platform for developers.