It’s easy to see why we need a “definition of done”. It is one of those things that seem so obvious once you adopt them. Without the ‘done’ definition, communication about development progress becomes ambiguous. If we are not explicit about what is required to complete a feature, the team might not account for other types of work, including review, testing, assuring maintainability, deployment, etc.

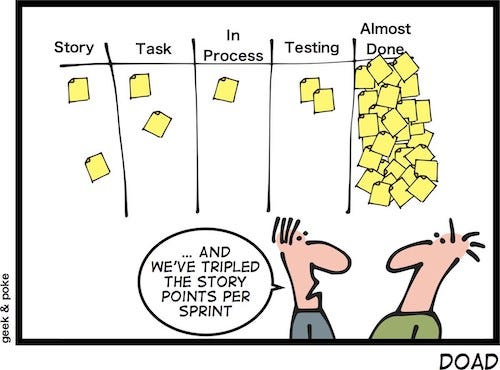

In many of the engineering teams I worked with, the developers would gravitate towards the more appealing coding tasks, leaving these other topics unaddressed. Eventually, we would discover that what was demoed as ‘done’ wasn’t really ‘done done’, as the expression goes. The menial remaining tasks, dismissed as small finishing touches, turned out to require major effort that disrupted the entire plan.

The ‘Definition of Done’ is awesome, important, we all need it.

It’s Also Misleading and Often Misinterpreted

The fact of the matter is that the definition of done does not define ‘done’ at all. The feature that was just released is often not done, not by a long shot. Unless we take that into account, we will again completely miss the mark in our resourcing and planning.

https://twitter.com/jbogard/status/1508208674627596298

I am reminded of an old post by Daniel Terhorst-North that somehow stuck in my mind even though it has been more than a decade. Dan North (I’m a big fan!) makes the point that software is really a very young industry that does not have the same type of experience and practices to draw on as more traditional crafts. What I would add is that this is especially impactful because software is also very different from other industries in how it is developed.

Software Development is Not Always Linear

While we have borrowed practices, terminologies, and metaphors from other professions (have you noticed the staggering number of software ‘architects’ about?), software is different. It is different from constructing a building, manufacturing a car, or welding steel. It is different because often the software we create isn’t one-and-done. Software is a continuum.

It is also impossible to develop it in one pass from start to finish. To know what we need to develop we need feedback, and feedback takes time. Traction does not grow overnight, and users will not adopt features right away. We try to assess the usability preferences, performance requirements, and intended usage of the functionality we deliver. It is too bad that users will forever stray from the happy path we’ve laid out before them.

Only when software features interact with the real world can we understand where they are on the maturity curve, what’s missing, and what needs to be made better. Only at that point is it possible to truly estimate what ‘done’ actually means for that feature.

The Definition of ‘Medium Rare’

When the development team decided they were ‘done’ or even ‘done done’, what they actually meant was that the feature is “mature enough to be deployed into production”. More often than not, in my experience, this is not how the rest of the organization perceived that milestone.

And this is where the true harm of the DOD becomes apparent. I’ve seen many Product Managers, upon hearing the word ‘done’, hurry to advance the roadmap’s tokens on the map. Because they are biased towards rolling out new functionality that shows more ‘progress’, any feedback that eventually arrives gets filed away as ‘technical debt’ or ‘enhancements’.

In this manner, optimizations, refactoring, and usability improvements based on real-world usage are fitted into a separate backlog. That backlog is nobody’s favorite.

Developing Towards Feature Maturity

Defining what’s good enough for initial deployment is important. We all need a DOD in our developer lives. However, additional practices need to be put in place so that the remaining effort is rightly recognized and accepted as a part of the feature development.

Here are a few practices that I’ve seen being applied successfully to support asynchronous development:

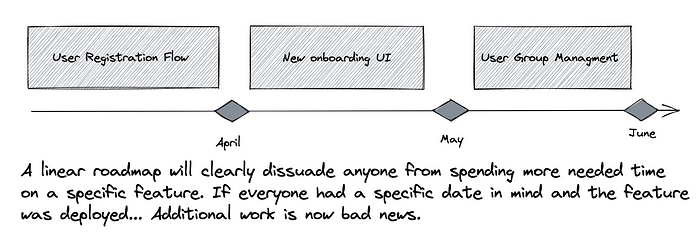

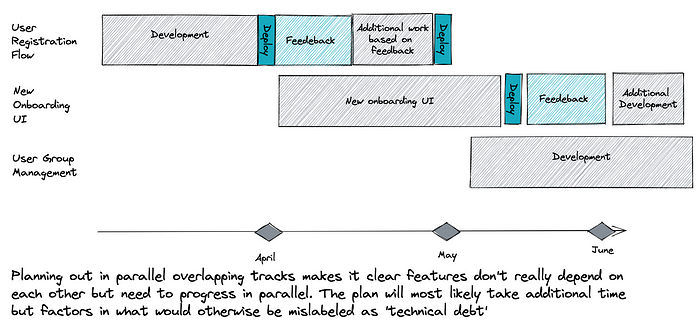

1. Plan feature progress as parallel tracks rather than linear paths, and plan for feedback and improvements in advance. Creating a work plan that has a Gantt-like line between feature ‘A’ and feature ‘B’ will automatically bias the entire organization to get to ‘B’ as fast as possible. On the other hand, having two separate tracks that both show progress will convey parallel progress.

I’m the last person to comment on the estimates vs. no-estimates debate. However, if some plans are put together based on assumptions, make sure these assumptions include feedback cycles.

2. Ensure your development tools can provide feedback. Otherwise, none of these maturity issues will bother you, since you just won’t know about them. When shipping features, developers should highlight what they want to track about them and make sure that information can reach them.

Technical, code-level feedback is just as important as product feedback. How is the code performing? Which code segments get used and which are never hit? What errors are the users hitting? Many maturity gaps get lost because they are too technical for Product Managers, and developers are not tracking them after the feature is released.

3. Continuously track feature maturity, along with the level of confidence in that maturity. Major features should always be on the dashboard, even after being delivered. In order to prioritize properly, Product Managers need to be able to assess existing feature gaps. Prioritization is key, and it needs to happen based on real data.
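To make the code-level questions in the second practice a little more concrete, here is a minimal Python sketch of what ‘making sure that information can reach you’ might look like. Everything in it is an assumption for illustration: the `track_feature` decorator, the in-memory `STATS` registry, and the `export_report` feature are hypothetical names, and a real team would more likely send this data to whatever observability tooling it already uses.

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field
from functools import wraps


@dataclass
class FeatureStats:
    """Usage counters for one feature (hypothetical structure)."""
    calls: int = 0
    errors: int = 0
    total_seconds: float = 0.0
    error_types: dict = field(default_factory=lambda: defaultdict(int))

    @property
    def error_rate(self) -> float:
        # Share of calls that raised; 0.0 if the feature was never hit.
        return self.errors / self.calls if self.calls else 0.0


# Hypothetical in-memory registry, keyed by feature name.
STATS = defaultdict(FeatureStats)


def track_feature(name):
    """Record calls, failures, and time spent for the wrapped function."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            stats = STATS[name]
            stats.calls += 1
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception as exc:
                # Count the error by type, then let it propagate unchanged.
                stats.errors += 1
                stats.error_types[type(exc).__name__] += 1
                raise
            finally:
                stats.total_seconds += time.perf_counter() - start
        return wrapper
    return decorator


@track_feature("export_report")
def export_report(rows):
    # Stand-in for a shipped feature; rejects empty input.
    if not rows:
        raise ValueError("nothing to export")
    return len(rows)
```

After the feature has been running for a while, `STATS["export_report"]` answers the questions above in a rough way: `calls` shows whether the code is being hit at all, `error_types` shows which errors users run into, and `total_seconds` gives a crude view of performance. A feature whose entry never appears in `STATS` is one that nobody has used yet.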

Conclusion — YMMV

Over the last few years, asynchronous functions have become the leading way to write code in multiple languages. With that in mind, asynchronous development and release processes might not seem so foreign.

How does your organization deal with the uncertainty of non-linear development and late-arriving feedback? Have you encountered any of the biases described here? How are you tracking feature maturity? I’m very interested in hearing about your own experiences, and the solutions being applied by organizations in the field.

Want to Connect? You can reach me on Twitter at @doppleware or here.

Follow my open-source project for continuous feedback at https://github.com/digma-ai/digma