Eran is one of the most forward-thinking people I know. Two years ago, he tried (unsuccessfully) to convince me that genAI changes everything. In this talk, I tried to convey a similar sentiment about MCPs.

Today, we’re diving into MCP, the Model Context Protocol. If you’ve somehow missed the buzz around it, don’t worry. We’ll break down what it is and why it matters. I’m thrilled to have Eran Bruder here with me. I’ve known him for years as one of the most passionate voices in generative AI. He was talking about this stuff long before it was mainstream, and honestly, he’s been eerily right about where things are heading. When we first met, he was practically a lone prophet of AI, cornering CTOs at dinners to talk about agents while everyone else was still catching up. I’m Ronnie, a longtime developer currently building an AI-integrated observability tool called Digma. I’ve lived through several major shifts in software, from agile to CI/CD, and I can confidently say this one feels the most disruptive yet. So let’s unpack MCP together: what it is, where it came from, and why it just might change how we build software.

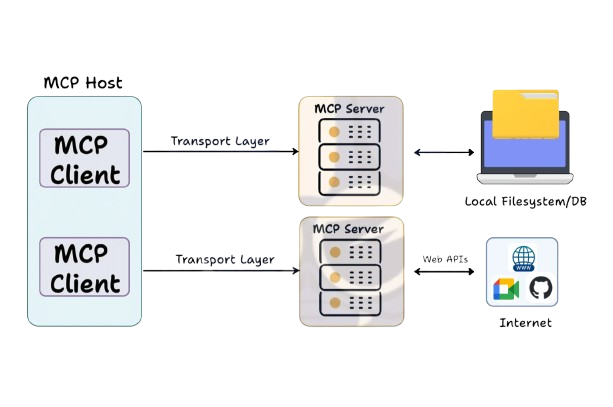

So what happens is, with MCPs, that whole spectrum changes. You’re no longer limited to either building highly generalized workflows or forcing users to create their own advanced dashboards. Instead, you enable the agent, your assistant essentially, to figure out what the user wants and build that experience dynamically by reasoning over the available data and the connected tools. It can even call remote MCP-compatible services to do things your tool doesn’t support natively.
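To make “calling an MCP-compatible service” a bit more concrete, here is a minimal sketch of the messages an agent’s client might send. MCP messages are JSON-RPC 2.0, and tools are discovered and invoked with the `tools/list` and `tools/call` methods. The tool name `get_endpoint_traces` and its arguments are hypothetical, made up for illustration; they are not Digma’s actual tools, and a real client would also handle transport, initialization, and responses.

```python
import json
from itertools import count

# Simple incrementing request ids, as JSON-RPC requires an id per request.
_ids = count(1)


def list_tools_request() -> dict:
    """Build a JSON-RPC request asking an MCP server which tools it exposes."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": "tools/list"}


def call_tool_request(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC request invoking one tool with the given arguments."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# Hypothetical tool and arguments, for illustration only.
request = call_tool_request(
    "get_endpoint_traces",
    {"endpoint": "GET /orders", "environment": "staging"},
)
print(json.dumps(request, indent=2))
```

The point of the sketch is that the agent, not the user, decides which tool to call and with which arguments, based on what the server advertises.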

That’s a huge shift in how we think about product UX in developer tools, especially in complex domains like observability. Instead of designing fixed flows, you’re designing for fluid collaboration between the agent, the tool, and external services, all orchestrated in a way that feels personalized and scalable.

That burden of using our UI and dashboard—of accessing data the way we decided to present it—all of that falls on the user. And often it’s a very manual process.

Let’s take an example. Let’s say I want to review my code, and observability is relevant for that. It falls on me to check dashboards, review the pull request, and cross-reference everything. Just to clarify—by observability, I mean the information we can gather about what happens when our application runs. That includes logs, traces, metrics—anything that gives us insight into our code at runtime, whether in pre-production or production.

This data is incredibly useful, but accessing it usually requires a lot of manual effort. And as a result, most people just don’t do it. So if I’m doing a code review and I want to dig deeper, I’d have to check logs, traces, metrics, dashboards, history, and try to identify anomalies—like if something behaves differently before caching kicks in. All that reasoning is left to the user.
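As a rough illustration of the caching example, here is a minimal sketch of one check a reviewer would otherwise do by eye: comparing latency before and after the cache warms up. It assumes the latency samples are listed in request order and that the first few requests hit a cold cache. The function name, the warm-up count, and the threshold are all hypothetical choices for illustration, not something taken from a specific tool.

```python
from statistics import median


def cold_start_anomaly(samples_ms, warmup_requests=3, ratio_threshold=2.0):
    """Compare median latency before and after the cache warms up.

    samples_ms: latencies in milliseconds, in request order.
    Returns None when there is not enough data on either side.
    """
    cold = samples_ms[:warmup_requests]
    warm = samples_ms[warmup_requests:]
    if not cold or not warm:
        return None

    cold_median = median(cold)
    warm_median = median(warm)
    if warm_median <= 0:
        return None

    ratio = cold_median / warm_median
    return {
        "cold_median_ms": cold_median,
        "warm_median_ms": warm_median,
        "ratio": ratio,
        # Flag the endpoint when cold requests are much slower than warm ones.
        "anomalous": ratio >= ratio_threshold,
    }


# Made-up sample data: three slow requests, then cached responses.
latencies = [480.0, 450.0, 470.0, 42.0, 40.0, 45.0, 41.0]
result = cold_start_anomaly(latencies)
print(result)
```

Even this small check requires knowing where to find the samples and what to compare, which is exactly the kind of effort that tends to get skipped during a routine code review.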

That’s the default today. You can only expose data in certain ways, and you can’t predict exactly what information a user will need or in what context. And even if you could, there’s still the mental load. We’re human. It’s a lot to think about. You might decide, “Okay, I’ll deal with this tomorrow,” and push forward.

Even more importantly, the answer doesn’t lie just in observability. There are many overlapping domains: code, version control, GitHub history, Jira tickets, Kubernetes tools, maybe groundcover, and others. When you describe all of that, it doesn’t sound like a normal workday; it sounds like crisis mode. You can’t handle all that every day.

You probably just limit yourself to what you’re mentally capable of digesting. And that might be enough. When we say “enough,” we’re not being philosophical; it might genuinely be good enough for today or the week. Your peer or manager might say “Good job,” but none of us has the full picture.

What we’re describing is the need to synthesize data from all these different sources. You need to reason about what’s meaningful, figure out if something is a root cause, and use all of that to fix or improve something—in this case, reviewing a pull request.

And because of that effort, most people won’t do it unless they’re forced to—like when production is down. Then everyone stops what they’re doing and goes through all the steps I just described. But in day-to-day work, we don’t have the time, focus, or sometimes even the expertise to do all that properly without others joining in.

This is a case study in the limitations of human-centric applications. Observability tools like APMs—application performance monitoring tools—have built interfaces to help developers, DevOps, SREs, etc. But being designed for humans, they’re constrained by human limits. You rely on users working with the UI and interpreting generic data to solve very specific problems. The burden always falls back on them.

This is where MCPs change everything. Suddenly, we’re not building for humans first—we’re building for agents. My role as a product manager no longer revolves around building the best UI to help a human navigate a limited dataset. I don’t need to force a complete match between what a user needs and what my product supports.

Now, there’s another player in the room: the agent. The agent can reason, decide, automate, and pull in data from many different sources. My mind-blowing moment this year was asking my agent (I was using Cursor at the time) to help me review my code changes. After working on our own MCP implementation, I asked it to help, and it just started doing all of those steps…

We are at minute 20:00 of the talk. You can continue watching the video, or check out our MCP page and our YouTube channel for more videos about MCPs.


Digma makes coding with AI smarter

Using a built-in MCP server, Digma leverages the data in your APM dashboards to assist the AI agent during code reviews, code and test generation, fix suggestions, etc.