AI agents are steadily becoming the world’s most prolific coders, whether they’re suggesting, completing, scaffolding, or generating entire classes. But as this trend accelerates, a hard question surfaces:

How trustworthy is your AI coding companion?

It’s easy to fall into the trap of thinking that as long as the code compiles and the tests pass, we’re good to go. Unfortunately, the agent’s occasional strokes of genius are still often accompanied by moments of mediocrity and sometimes embarrassingly silly mistakes. Just as generated images can show people with the wrong number of fingers or impossibly angled limbs, generated code often carries the telltale flaws of an imperfect AI.

Make no mistake, this is not a Luddite protest against generative AI. AI models are a huge leap forward. They can become a force multiplier for the team’s productivity and offload many coding tasks from seasoned developers. However, they have yet to shake off some quirks and a tendency to make frustratingly stupid errors every so often. This is further exacerbated by an almost incurable eagerness to please, a can-do attitude that has plagued models since the early days of ChatGPT and is familiar to any user of the platform. Ask a question, and the answer gravitates toward the positive, as numerous memes demonstrate, crowding out the critical thinking that coding often requires.

The Hidden Risks of AI Code

We’re already seeing real-world problems: duplicated logic, suboptimal implementations, deceitful tests, and subtle bugs that show up in production more often than they should. This isn’t because the AI is malicious, but because it combines imperfection with an uncanny ability to inspire confidence. At times, the execution is lacking; other times, the AI is simply missing vital context about the architecture, stack, or intended usage.

Ultimately, the development team is responsible: it has to feel comfortable delegating coding tasks to AI agents and enforcing the right validation methods. Extensive reviews, more testing, and multiple iterations of code generation can help mitigate reliability issues, but they can also undercut some of the productivity gains. What are the alternatives?

Responsible AI Begins with Feedback

Responsible AI adoption means building feedback loops that validate the AI’s suggestions at every stage, from correctness to scalability and clean code. Feedback works well with AI agents because it lets them use their autonomy to rethink, reason, and improve. The more feedback the agent receives, the better its chances of producing great code.

From Manual to Automatic Feedback

There are multiple types of feedback you can leverage to improve AI coding results. However, the best type is a feedback loop that doesn’t involve humans at all: the AI agent keeps working on improvements while developers invest their time in other tasks.

1. Make the AI write and execute unit and integration tests

- Tests should be the norm: Along with the code, ask the AI to write comprehensive unit tests covering both normal and edge-case behavior.

- Incremental improvement: Ask the AI to rerun the tests after every change and fix any failing code until the suite passes.

- Avoid a false sense of confidence: Tests can be misleading. A green test suite does not guarantee quality, or even a correct result.

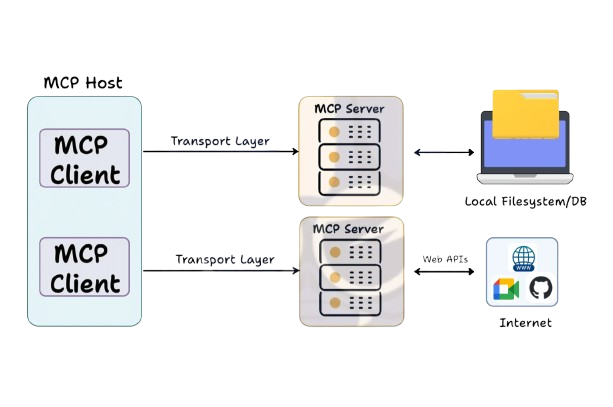
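The test-and-fix loop described above can be sketched as a small driver. This is a minimal sketch, assuming a hypothetical `ask_agent` callable that wraps whatever coding agent you use (it receives the last failing test report, or `None` on the first pass, and writes code and tests to disk) and a test command such as `pytest -q`. None of these names come from a specific tool.

```python
import subprocess
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical agent hook: receives the last failing test report (None on the
# first pass) and asks your coding agent to write or fix code and tests on disk.
AskAgent = Callable[[Optional[str]], None]
# Test runner: returns (all tests passed?, combined output to feed back).
RunTests = Callable[[], Tuple[bool, str]]


@dataclass
class LoopResult:
    passed: bool
    iterations: int
    reports: List[str] = field(default_factory=list)


def run_test_command(cmd: List[str]) -> Tuple[bool, str]:
    """Run a test command such as ["pytest", "-q"]; exit code 0 means green."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    return proc.returncode == 0, proc.stdout + proc.stderr


def run_feedback_loop(ask_agent: AskAgent, run_tests: RunTests,
                      max_iterations: int = 5) -> LoopResult:
    """Let the agent iterate against the test suite until it passes or we give up."""
    feedback: Optional[str] = None
    reports: List[str] = []
    for iteration in range(1, max_iterations + 1):
        ask_agent(feedback)            # agent writes or repairs code and tests
        passed, report = run_tests()   # execute the suite, capture the output
        reports.append(report)
        if passed:
            return LoopResult(True, iteration, reports)
        feedback = report              # failing output becomes the next prompt
    return LoopResult(False, max_iterations, reports)
```

A typical call might look like `run_feedback_loop(my_agent, lambda: run_test_command(["pytest", "-q"]))`, where `my_agent` is your own (hypothetical) wrapper. Note that the loop stops at the first green run, which is exactly the false-confidence trap mentioned above: passing tests end the automated loop, but they say nothing about whether the tests themselves are meaningful.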

2. Use MCP servers to provide real-world feedback

- Ground code with real-world observability: This is where Dynamic Code Analysis comes in, with tools like Digma that can fetch relevant observability data. The Digma MCP Server lets your AI agent access runtime and performance context during code reviews, test generation, fix suggestions, and more. Instead of relying solely on training data, the AI gets real-time behavioral feedback from your own APM dashboards. That means better fixes, smarter test cases, and fewer regressions sneaking into prod.

- Let the AI decide when and how to use the data, but enforce it with rules: Define standard rules for code review, test generation, bug fixes, and similar tasks that point the agent to the real-world data it can use.

3. Humans still need to review the code, but they can use AI to help

- Conduct peer reviews with senior developers.

- That said, to preserve productivity, developers can ask the AI to test the change, analyze pre-production observability data for the new feature, or identify risky code. A great example is the screencast we posted on enhancing code reviews using dynamic code analysis and observability data: https://www.youtube.com/watch?v=bFv-ptGvLo8

4. Don’t be afraid to play good agent / bad agent

- Agents tend to have a positive bias; that’s why it can be beneficial to provide two prompts with contradictory goals. For example, one to write code and the other to find issues with it. When ‘success’ means finding problems and issues, the agent can finally channel its inner critic.

- Create a feedback loop between the two agents until they reach an agreement.
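The good agent / bad agent loop can be sketched as follows. This is a minimal sketch, assuming hypothetical `writer` and `critic` callables that wrap two separately prompted agents (or the same model given contradictory instructions); how each one talks to an actual model is left out on purpose.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Review:
    approved: bool
    issues: List[str] = field(default_factory=list)


# Hypothetical wrappers around two differently prompted agents.
Writer = Callable[[str, List[str]], str]   # (task, open issues) -> code
Critic = Callable[[str, str], Review]      # (task, code) -> review


def good_agent_bad_agent(task: str, writer: Writer, critic: Critic,
                         max_rounds: int = 4) -> Tuple[str, Review, int]:
    """Alternate writer and critic until the critic approves or rounds run out."""
    issues: List[str] = []
    code, review = "", Review(approved=False)
    for round_no in range(1, max_rounds + 1):
        code = writer(task, issues)    # "good agent": make the code satisfy the task
        review = critic(task, code)    # "bad agent": success means finding problems
        if review.approved:
            return code, review, round_no
        issues = review.issues         # critic's findings drive the next rewrite
    return code, review, max_rounds    # no agreement: escalate to a human reviewer
```

Capping the number of rounds is a deliberate choice: two agents with opposing goals may never fully agree, and an unapproved result after the last round is a natural point to hand the change to a human reviewer.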

Dynamic Code Analysis: The Key to Reliable AI-Generated Code

Of the many types of feedback that can be introduced into the code generation process, a feedback loop grounded in real execution is the most promising and the least utilized. Connecting the AI coding agent to the real data already sitting in your APM dashboards provides missing context that makes its suggestions more intelligent and targeted and allows a much deeper validation cycle. Whether the agent is generating a new method, writing a test, or proposing a fix for a production issue, it has access to the same telemetry data your SREs and engineers do, with one advantage: it is always ready and available to analyze and leverage that data.
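To make the idea of runtime context concrete, here is a minimal sketch of condensing raw APM data into a few lines that could be placed in an agent’s prompt. It is not how the Digma MCP Server works internally; the `(endpoint, duration_ms)` span tuples, the nearest-rank 95th-percentile summary, and the `slow_ms` threshold are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def p95(durations: List[float]) -> float:
    """Nearest-rank 95th percentile of a non-empty list."""
    ordered = sorted(durations)
    rank = (95 * len(ordered) + 99) // 100   # ceil(0.95 * n) in integer math
    return ordered[rank - 1]


def runtime_context(spans: Iterable[Tuple[str, float]], slow_ms: float) -> str:
    """Condense hypothetical (endpoint, duration_ms) spans into agent-readable lines."""
    by_endpoint: Dict[str, List[float]] = defaultdict(list)
    for endpoint, duration_ms in spans:
        by_endpoint[endpoint].append(duration_ms)
    lines = []
    for endpoint in sorted(by_endpoint):
        durations = by_endpoint[endpoint]
        latency = p95(durations)
        flag = "SLOW" if latency > slow_ms else "ok"
        lines.append(f"{endpoint}: p95={latency:.0f}ms over {len(durations)} calls [{flag}]")
    return "\n".join(lines)
```

The summarizing step hands the agent a few lines of signal rather than thousands of raw spans, so a fix or test it proposes can target the endpoint that is actually slow in practice.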

Conclusion: The Dark Side of Gen AI Coding

AI-assisted development isn’t going away. But it’s time we stopped treating Gen AI like an infallible coding buddy and started holding it to the same standards we apply to human developers. That means testing its output, understanding its behavior, and validating it against real-world metrics.

Don’t let Gen AI run wild. Tame it with feedback. Empower it with data. And make sure your team stays in the driver’s seat.

Watch this insightful conversation with Eran Broder. Eran is one of the most interesting technologists we know, and the guy who (unsuccessfully) tried to convince us two years ago that Gen AI would change everything… Now we believe him: https://www.youtube.com/watch?v=bPLWdzQBL1Q

Sign up for early access: Here